Would you believe eating chocolate could help you lose weight? Thousands of people around the world did. According to “experts” on global television news shows and outlets, including Shape magazine and Bild, Europe’s largest daily newspaper, a team of German researchers found that people on a low-carb diet lost weight 10 percent faster if they ate a chocolate bar every day. Not only does chocolate accelerate weight loss, the study found, but it also leads to healthier cholesterol levels and increased overall well-being. It sounds like great news. Unfortunately, it isn’t true.

Peter Onneken and Diana Lobl, a pair of German documentary filmmakers, came up with the idea for the study to show how easy it is for bad science to get published. Working with Science Magazine correspondent John Bohannon, the team created a website for a fake organization, the “Institute of Diet and Health,” and recruited a doctor and an analyst to join in the hoax. They performed a real study, with real people, but did “a really bad job, on purpose, with the science,” Bohannon said in a CBSN interview. It’s a great illustration of how poorly executed research can appear to represent the truth if it isn’t examined closely, especially if it’s hyped by media and promotion.

Reporters should have used more rigor before promoting the study’s findings. Similarly, educators should examine research carefully before adopting new policies at schools. In this blog post, we’ll evaluate five research examples for quality.

Example 1: XYZ Math

"I am a supporter of bringing XYZ Math to Jones Elementary after seeing a huge success with it while I was assistant principal at Westside Elementary last year. We saw more than a 15% increase in our math scores in one year, and the only thing we did differently was use XYZ Math."

Elementary school assistant principal

This anecdotal testimonial suggests that XYZ Math raised test scores, but a more rigorous evaluation would be needed to draw a strong conclusion. The assistant principal might not remember or recognize other changes that affected students’ achievement that year. These could include changes in the student body, teacher experience, or other recent reforms.

Example 2: Education Journal Excerpt

During the 90-minute English/language arts block at Northeast High, each of the 15 students in the remedial class uses a computer to strengthen basic skills, including decoding, reading fluency, vocabulary, and comprehension. An audio feature allows students to record themselves reading or listen to a taped version of the text.

The activities bolster group lessons on grammar, writing conventions, and literature, and equip students for tackling grade-level reading assignments independently. Ms. Garcia said nearly all the students advanced two grade levels or more in reading.

This article describes the perceived learning benefits of the software, probably using the same description that the reading program company uses. It cites changes in reading level over time, but those changes could be due to many factors besides the program, such as regular classroom instruction during the same period. Without a comparison group that did not receive the software, we cannot know what would have happened without it.
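To make the role of a comparison group concrete, here is a minimal Python sketch. All of the numbers are invented for illustration and do not come from the article; the function names are likewise hypothetical. It contrasts the raw gain a before-and-after report would cite with the gain relative to similar students who did not use the software.

```python
from statistics import mean

# Hypothetical reading gains in grade levels, invented for illustration only.
software_gains = [2.1, 1.8, 2.4, 2.0, 1.7]    # students who used the software
comparison_gains = [1.6, 1.9, 1.5, 2.0, 1.5]  # similar students who did not


def naive_gain(gains):
    """Average gain with no comparison group (what a before/after report cites)."""
    return mean(gains)


def gain_vs_comparison(treated, comparison):
    """Average gain of users minus average gain of the comparison group."""
    return mean(treated) - mean(comparison)


raw = naive_gain(software_gains)
relative = gain_vs_comparison(software_gains, comparison_gains)
print(f"Raw gain: {raw:.1f} grade levels")
print(f"Gain relative to comparison group: {relative:.1f} grade levels")
```

In this made-up case most of the raw gain also appears among students who never used the software, so only the remainder could plausibly be credited to the program, and even that holds only if the two groups were similar to begin with.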

Example 3: Blog Post Excerpt: Struggling Math Students Gain Using Personalized, Blended Program

Middle school students participating in a personalized, blended-learning math program showed increased gains in math skills – up to nearly 50 percent higher in some cases – over the national average, according to a new study from Teachers College, Columbia University.

During the 17-18 school year, students using XYZ Math gained math skills at a rate about 15% higher than the national average. In the second year of implementation, students made gains of about 47% above national norms, even though some of those students were still in their first year of using XYZ Math.

Is this conclusive evidence of the technology’s effectiveness? No. The study compares students who used the program with national averages, not with a group constructed to be very similar to them, so other differences between the two populations could have caused some of the gains. This makes it a correlational, rather than causal, analysis.

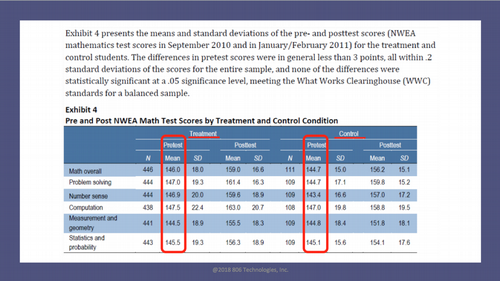

Example 4: Dream Box

The strength of the evidence on Dream Box’s impact relies on the fact that students in the study who used the program were very similar to those who did not; in other words, the sample was balanced across the user and comparison groups.

The paragraph and table show that this study met widely accepted standards for balance. In particular, the study found that students had similar scores on a baseline version of the test used to measure outcomes; this is generally considered the most important aspect of balance.
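One common way to quantify balance on a baseline test is the standardized mean difference: the gap between group means divided by a pooled standard deviation. The sketch below uses invented scores, and the 0.25 cutoff is an assumption modeled on the What Works Clearinghouse baseline-equivalence rule; the study discussed here may have applied a different standard.

```python
from math import sqrt
from statistics import mean, variance


def standardized_mean_difference(users, comparison):
    """Difference in group means, in units of the pooled standard deviation."""
    pooled_sd = sqrt((variance(users) + variance(comparison)) / 2)
    if pooled_sd == 0:
        raise ValueError("Scores have no variation; the difference is undefined.")
    return (mean(users) - mean(comparison)) / pooled_sd


# Hypothetical baseline test scores, invented for illustration only.
user_baseline = [48, 52, 50, 47, 53]
comparison_baseline = [49, 51, 50, 46, 53]

# Assumed cutoff: larger baseline gaps are treated as not balanced.
MAX_ACCEPTABLE_GAP = 0.25

smd = standardized_mean_difference(user_baseline, comparison_baseline)
balanced = abs(smd) <= MAX_ACCEPTABLE_GAP
print(f"Standardized mean difference: {smd:.3f} (balanced: {balanced})")
```

A value near zero means the two groups started from about the same place, which is what makes a later difference in outcomes easier to attribute to the program.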

Example 5: Bedtime Math

In a series of studies on how adult anxieties and stereotypes affect students’ math performance, University of Chicago researchers found that students whose families used a free tablet app to work through math-related puzzles and stories each week had significantly more growth in math by the end of the year, particularly if their families were uncomfortable with the subject.

In the randomized controlled trial, University of Chicago psychologists followed 587 1st graders and their families at 22 Chicago-area schools. The families were randomly assigned to use an iPad with either a reading-related app or Bedtime Math, a free app that provides story-like math word problems for parents to read with their children. The children were tested in math at the beginning and end of the school year.

Notably, the students of parents who admitted dreading math at the beginning of the year showed the strongest growth from using the app at least once a week. That’s important, since this study and prior research have shown that parents who are highly anxious about math have children who show less growth in the subject and who are more likely to become fearful of the subject themselves.

The article above reports results from a randomized controlled trial (RCT), the gold standard in causal analysis. Families were randomly assigned to the math app or to a reading app, so the two groups should be very similar, on average, in everything except the app they used. Because we would expect the groups to be equivalent before the trial, a difference in math growth larger than chance would produce can be attributed to the technology. The study described in this article presents strong evidence on the effectiveness of this technology among these Chicago-area students.
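The claim that random assignment produces similar groups can be checked with a small simulation. This is a sketch under stated assumptions, not the researchers' procedure: it uses simple student-level randomization, simulated baseline scores with an arbitrary mean of 50 and standard deviation of 10, and a hypothetical helper named `randomly_assign`. Only the sample size of 587 comes from the article.

```python
import random
from statistics import mean


def randomly_assign(student_ids, seed=0):
    """Shuffle the IDs and split them into two groups of near-equal size."""
    rng = random.Random(seed)
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Simulated baseline math scores for 587 students (values are invented).
score_rng = random.Random(42)
baseline = {sid: score_rng.gauss(50, 10) for sid in range(587)}

math_app, reading_app = randomly_assign(baseline.keys(), seed=1)

# Gap in average baseline score between the two randomly formed groups.
baseline_gap = (
    mean([baseline[s] for s in math_app])
    - mean([baseline[s] for s in reading_app])
)
print(f"Group sizes: {len(math_app)} and {len(reading_app)}")
print(f"Baseline gap between groups: {baseline_gap:.2f} points")
```

Rerunning with different seeds changes the exact gap, but it stays small relative to the 10-point spread in individual scores, which is why a sizable difference at the end of the year points to the app rather than to who happened to be in each group.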